15 techniques plus tons of tools to spot AI-generated text, videos, images and audio in OSINT investigations.

After the public release of ChatGPT, adoption of generative AI technology surged. Individuals and organizations began using it widely in various contexts, such as customizing customers' experiences and increasing employees' productivity. Nevertheless, there are numerous cases where generative AI can be put to malicious use. Generating fake content is among the top threats of this technology.

From the online investigator's perspective, the two most prominent OSINT challenges are the sheer volume of public data and its trustworthiness. Generative AI can worsen both: it produces massive amounts of content at little cost, and that content could be fake.

In this guide, I will discuss how OSINT investigators can use various methods, tools and techniques to spot fake content spread across the web. However, before I begin, let me briefly discuss why threat actors spread phony content.


Why do threat actors spread fake content?

Fact-checking public information is a vital challenge faced by OSINT investigators. When gathering public data, OSINT gatherers cannot trust information collected from a single source. They need to inspect different sources and use their analytical skills to verify the information before they can consider it valid.

Threat actors, and other parties, have different motivations for spreading fake information. Here are some reasons:

- Impact public opinion: for example, spreading fabricated videos to mislead the public in elections (this happened recently in an attempt to influence the upcoming U.S. election)

- Spread propaganda: terrorist organizations and organized criminal groups commonly use this tactic for different reasons

- Financial gain: spreading fake news, misleading statistics and other business figures to impact stock prices; for example, driving down a specific stock's price to purchase it more cheaply

- Impact brands: spreading fake news to damage a specific brand; for example, posting a manipulated photo of a burned smartphone and claiming it suffers from a design problem in order to hurt its sales

- Cyber espionage: state-sponsored actors may spread fake content to divert intelligence agencies and OSINT gatherers from their real targets; for example, China may spread fake news in a specific area to draw foreign government agencies' attention away from its true agenda

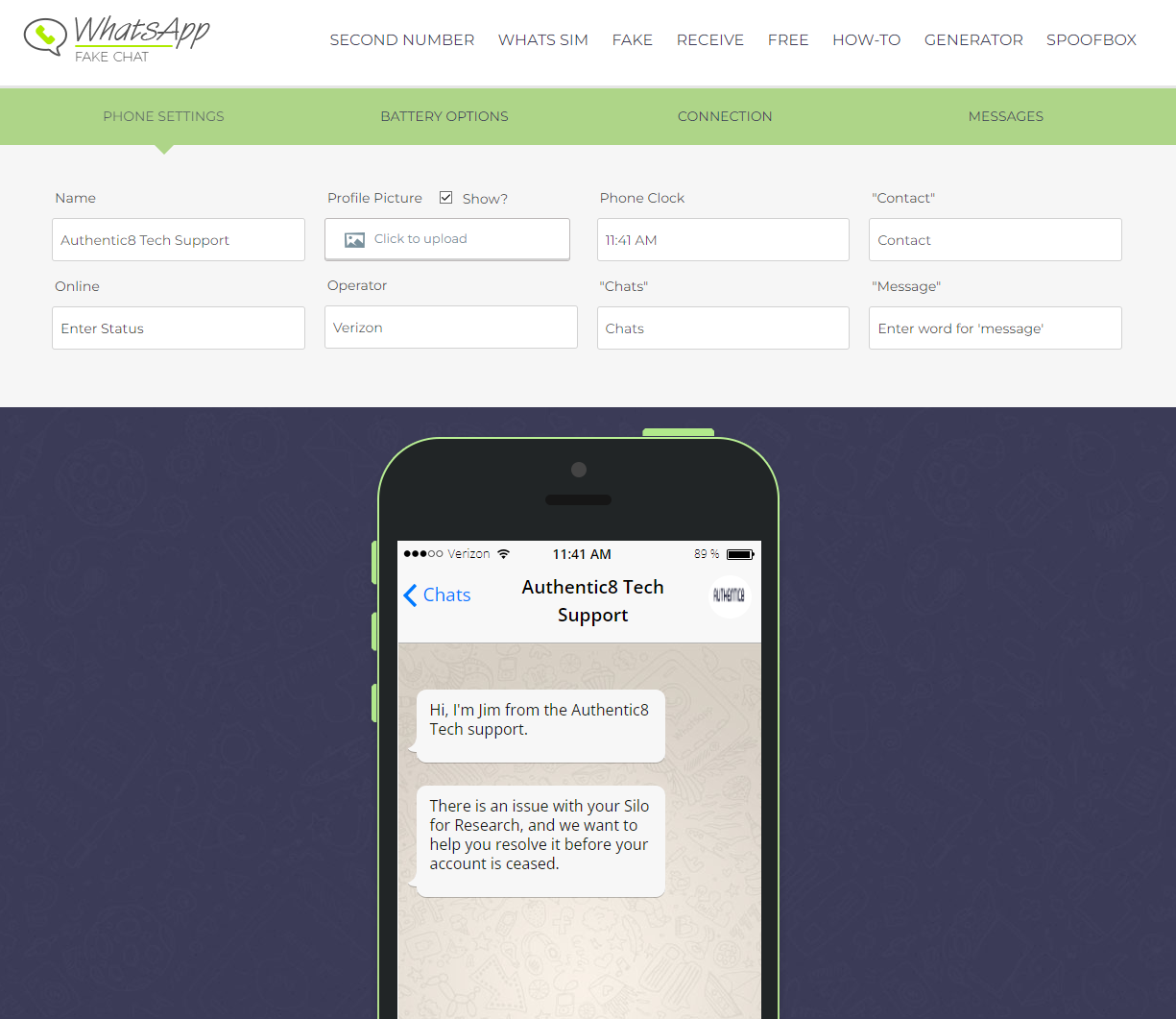

- Social engineering attacks: threat actors may use generative AI tools to create fake content to deceive people into revealing sensitive information such as login credentials; for example, Fakewhats allows the generation of fake screen captures of the popular WhatsApp application that can be used in different social engineering attacks (see Figure 1)

FIG 1 | Generating a fake WhatsApp chat conversation

These are the most popular reasons why threat actors spread phony content. The public availability of generative AI tools significantly increases this risk.

AI-generated content types

Generative AI tools can generate different types of content; the most prominent ones are:

- Text

- Images

- Videos

- Audio

AI tools can generate other content types, such as 3D models, code and datasets for training other AI models. However, the first four content types are the ones we care about from an OSINT perspective.

Here is a description of what each content type may include:

Text

AI text-generative tools can produce different types of text content, such as articles, blog posts, news pieces, social media posts, email messages and ads.

Here are some AI text-generative tools:

Please note that I mention the main large language models (LLMs) behind generative AI tools. Numerous tools are powered by these LLMs and offer different customization options, such as:

- Jasper: Powered by the GPT model

- Writesonic: Powered by the GPT model

- Writer: Powered by the Palmyra model

Images

AI image generation tools can create images from scratch (e.g., people, objects, scenes, artwork, drawings) and edit existing images based on text prompts supplied by the user. Here are some AI image-generation tools:

Videos

AI tools can create fake videos or swap faces on existing videos to manipulate them. Some tools allow the generation of videos from text prompts. Here are some AI-powered video generation tools:

Audio

AI tools can convert written text into speech or clone a specific person's voice. This tactic has been used to execute social engineering attacks. The most notable one was the attack against a UAE bank that cost it $35 million.

Here are some AI audio generation tools:

How do we discover AI-generated content in OSINT gathering?

Despite the substantial technological advances and the ability to generate realistic images, videos and text, there are still many ways AI-generated content can be discovered. In this guide, I will cover tools and techniques to discover AI-generated content. The focus will be on detecting text and images.

Spotting AI-generated text content

AI-generated text is the most popular type of generative AI output. Here are some tips to discover such text:

- AI-generated text commonly uses a specific phrase or keywords many times within a short paragraph

- Look at the citation section if you are inspecting academic or scientific papers: AI tools may sometimes provide inaccurate or unrelated citations

- Text generated by AI tools is commonly generic; for example, if you read a blog post that looks too generic and does not contain opinions, statistics or real-world examples to support its points, then it could be generated by an AI tool

- AI-generated text will commonly contain short sentences and paragraphs, and is often less complex than human-written content
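Two of the tips above, repeated phrases and short sentences, can be roughly measured. The following is a minimal sketch, not a detector: the function names, the three-word phrase length and the sample text are my own assumptions for illustration, and high repetition or short sentences are only weak hints that call for further checks.

```python
import re
from collections import Counter


def repeated_phrases(text, n=3, min_count=2):
    """Return word n-grams that occur at least min_count times in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return {phrase: c for phrase, c in counts.items() if c >= min_count}


def average_sentence_length(text):
    """Return the mean number of words per sentence (0.0 for empty text)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not sentences:
        return 0.0
    return sum(len(s.split()) for s in sentences) / len(sentences)


# Invented sample text, used only to show the two measurements.
sample = (
    "Our product is a game changer. It is a game changer for teams. "
    "Every team needs a game changer."
)
print(repeated_phrases(sample))
print(average_sentence_length(sample))
```

Comparing these numbers against other texts known to come from the same claimed author is more informative than reading them in isolation.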

Some tools can help discover AI-generated text. They are not foolproof, but they can aid you when you are in doubt.

Spotting AI-generated images

The release of Adobe Photoshop in 1990 was a remarkable milestone in the history of digital image manipulation software. Later releases made it easier for experienced users to perform numerous editing tasks on images. With the emergence of generative AI, Adobe incorporated AI generative capabilities into its cloud solution, Adobe Firefly, which allows ordinary users to manipulate images using advanced techniques by providing simple text prompts. While this capability significantly eases graphic designers' work, it also opens the door for threat actors to create fake images.

Fortunately, images created with AI tools still have distinctive looks that tech-savvy users can detect. Nevertheless, some AI-generated images are challenging to detect, especially with the continual advancement in generative AI technology. In this section, I will propose some tips to discover AI-manipulated images:

- Check image metadata information: metadata contains data about the image itself, and AI tools may not generate accurate metadata or may insert random data (like a beach scene tagged with GPS coordinates in Japan)

- Look for unnatural characteristics: AI-generated images often have clear glossiness and sharp, exaggerated colors that lack natural variation

- Put your artist hat on: look for inconsistent lighting and for shadows that do not match the direction of the light that appears in the image

- The hands hold the secret: AI tools have problems creating natural human fingers because their datasets focus mainly on human faces (see Figure 2); this issue will be resolved in the future, but until then, always focus on the person's fingers when available in images

FIG 2 | Fingers appear strange in a photo generated using the Stable Diffusion AI tool

- Blurred backgrounds: AI-generated images may use abnormal or blurred backgrounds, or different backgrounds assembled from unrelated images; for example, lines may be misaligned, furniture may be too big compared with its actual size and light sources (such as lamps) may appear in mismatched sizes

- Look at a person's hair: Generally, it appears too thick in AI images and sometimes hovers high above a person's head, or it may lack natural gradients and textures

- Examine accessories, such as watches and jewelry: Watches may be attached unnaturally to the body, and jewelry may strangely float off the body

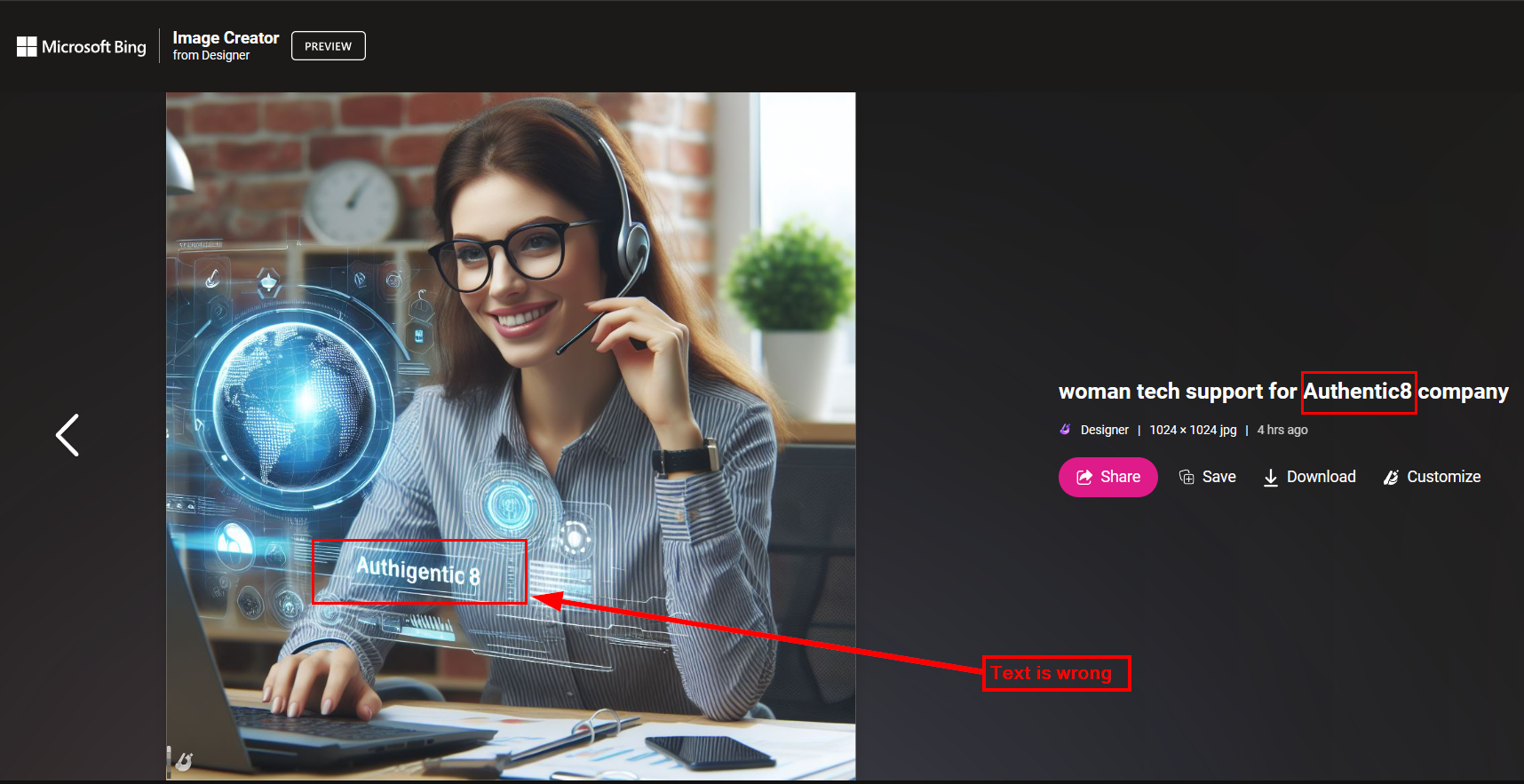

- Read the text that appears in the image: text that appears in AI images (e.g., on storefronts) sometimes is written using inconsistent fonts and styles; for example, I requested that the text "Authentic8" be added to AI-generated images using Microsoft Bing Image Creator, but it appeared written in a wrong way (see Figure 3)

FIG 3 | Look at the text in AI-generated images; if it is wrong, this could be a hoax

- Same faces in the crowd: If the image contains a crowd, AI sometimes reuses the same face for many people

- Identical objects: look at the objects in the image, such as pens, computers, phones and any desk tools; if you can find two identical objects, this could be a fake image

- Random watermark: AI tools sometimes embed watermarks (e.g., logo, small image or text) somewhere in generated images

You should also conduct a reverse image search. This allows you to check the authentic source of the image and whether it appears elsewhere. There are many reverse image search engines like:

There are also some tools to detect AI-generated images:

- AI or Not: used to detect both image and audio content

- Content at Scale

- AI Image Detector

- SynthID from Google


Investigators may need to use other tools to aid them in discovering AI-generated images, such as:

- Metadata viewers: such as ExifTool and Exif Pilot

- Image zoom tools: such as Pixelied Image Zoomer, AI Photo Zoomer and VanceAI Image Upscaler
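The metadata check can be partly automated once a metadata viewer has exported its findings. The sketch below assumes the input is shaped like the JSON that ExifTool prints with its `-json` option. The `metadata_flags` function, the list of generator names and the sample values are hypothetical and serve only as an illustration.

```python
import json

# Hypothetical, non-exhaustive list of generator names to look for in the
# Software tag; extend it for your own investigations.
GENERATOR_HINTS = ("stable diffusion", "midjourney", "dall-e", "firefly")

# Standard EXIF tags that a camera or phone normally writes.
CAMERA_TAGS = ("Make", "Model", "DateTimeOriginal")


def metadata_flags(record):
    """Return a list of human-readable warnings for one metadata record."""
    flags = []
    missing = [tag for tag in CAMERA_TAGS if not record.get(tag)]
    if missing:
        flags.append("missing camera tags: " + ", ".join(missing))
    software = str(record.get("Software", "")).lower()
    for hint in GENERATOR_HINTS:
        if hint in software:
            flags.append("Software tag mentions: " + hint)
    return flags


# Example input shaped like `exiftool -json image.png` output (values invented).
exiftool_output = """
[{"SourceFile": "image.png", "Software": "Adobe Firefly", "ImageWidth": 1024}]
"""

for record in json.loads(exiftool_output):
    print(record["SourceFile"], metadata_flags(record))
```

Treat each flag as a prompt for closer inspection rather than proof: many platforms strip metadata from genuine photos on upload, and metadata can be edited.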


Detecting AI-generated content cannot be guaranteed. However, by following the tips and utilizing the tools outlined in this guide, OSINT gatherers can significantly improve their ability to identify AI-generated content.
